RSS is dead

(The story of github.com/zserge/headline).

About seven years ago Google decided to shut down Google Reader, a world known and beloved RSS reader, and I think no other service has been so genuinely lamented since then. I remember the warm feeling when I opened Reader on a tiny HTC Wildfire that fit well in my palm and read stories from dozens of blogs of interesting and unusual people I was subscribed to.

Of course, I tried Feedly and Inoreader and many other alternatives, even installed Tiny Tiny RSS on my server, but nothing felt the same. After many years I found that I don’t ready RSS anymore, but rather get my news from Twitter, HN or Reddit. Sad.

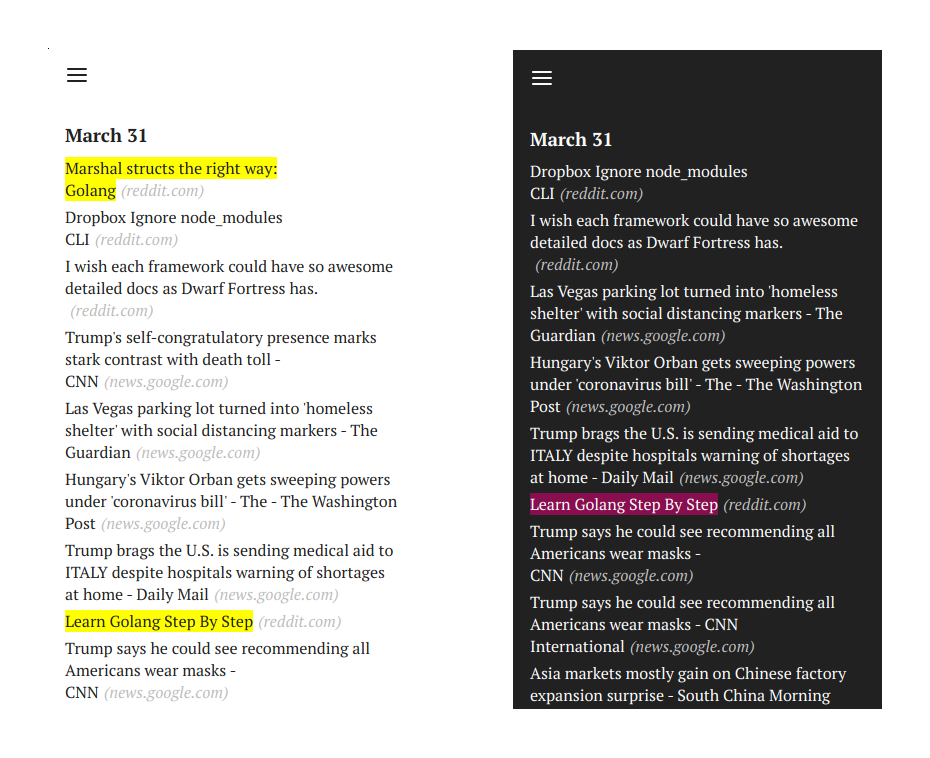

So I though that maybe it’s time to make an RSS reader of my own, the one that would fit my personal needs better than the others, and maybe this will bring back my good old habit of reading RSS. The picture above is the result.

Past

So it all started several years ago. It was clear that web would become a dominating technology, so I took some jQuery, added some CSS and created a tiny HTML page that downloaded RSS content via AJAX, parsed it and printed on screen the titles. I was curious about minimalism back then and that page only displayed brief news headlines with nice clear typography. Even to add another blog I had to edit the HTML source itself. The good thing is that my RSS reader page never collected any personal information, did require any logins and was extremely easy to use.

The short function that did all the parsing for RSS and Atom feeds was something that still amazes me, how simple it was (converted to ES6):

function parseFeed(text) {

const xml = new DOMParser().parseFromString(text, 'text/xml');

const map = (c, f) => Array.prototype.slice.call(c, 0).map(f);

const tag = (item, name) =>

(item.getElementsByTagName(name)[0] || {}).textContent;

switch (xml.documentElement.nodeName) {

case 'rss':

return map(xml.documentElement.getElementsByTagName('item'), item => ({

link: tag(item, 'link'),

title: tag(item, 'title'),

timestamp: new Date(tag(item, 'pubDate')),

}));

case 'feed':

return map(xml.documentElement.getElementsByTagName('entry'), item => ({

link: map(item.getElementsByTagName('link'), link => {

const rel = link.getAttribute('rel');

if (!rel || rel === 'alternate') {

return link.getAttribute('href');

}

})[0],

title: tag(item, 'title'),

timestamp: new Date(tag(item, 'updated')),

}));

}

return [];

}

Suddenly, more and more blogs become unreachable to my lovely reader. The reason was CORS, which made it impossible to fetch RSS/Atom XMLs from client-side javascript from another origin. So I edited my HTML news page to use some public CORS proxies.

As those started to die, I have written my own in Go, which was the language I was learning back then. Here’s how it looks like:

package main

import (

"flag"

"io"

"log"

"net/http"

"net/url"

)

func main() {

addr := flag.String("addr", ":8080", "Address to listen on")

flag.Parse()

http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

w.Header().Add("Access-Control-Allow-Origin", "*")

w.Header().Add("Access-Control-Allow-Methods", "GET, PUT, PATCH, POST, DELETE")

w.Header().Add("Access-Control-Allow-Headers", r.Header.Get("Access-Control-Request-Headers"))

u := r.URL.Query().Get("u")

if _, err := url.ParseRequestURI(u); err != nil {

http.Error(w, "bad URL", http.StatusBadRequest)

return

}

req, err := http.NewRequest("GET", u, nil)

if err != nil {

http.Error(w, err.Error(), http.StatusInternalServerError)

return

}

req = req.WithContext(r.Context())

req.Header.Set("User-Agent", r.Header.Get("User-Agent"))

res, err := http.DefaultClient.Do(req)

if err != nil {

http.Error(w, err.Error(), http.StatusInternalServerError)

return

}

defer res.Body.Close()

for k, v := range res.Header {

for _, s := range v {

w.Header().Add(k, s)

}

}

w.WriteHeader(res.StatusCode)

io.Copy(w, res.Body)

})

log.Fatal(http.ListenAndServe(*addr, nil))

}

A combination of a personal CORS proxy and an HTML file for consuming the news worked pretty well, but somehow I stopped using it.

Present

Recently I thought that I still want to follow on the bloggers, that are not so known for Twitter to recommend them, but are still interesting to me. So I decided to revive the Headline.

Looking at the old-school jQuery felt weird these days, so I took my tiny React clone and quickly converted it into a single-page application. I decided that it should let users add/remove feeds without forcing them to edit HTML, also I added some animations and made news fetching an async operation so that user would never have to reload the page.

But after I started using it on my laptop and my phone - I thought that maybe a simpler and dumber approach was the right one.

Do I ditched my JSX and created initial layout straight in the HTML. I used <template> sections to define layout of dynamically added elements, like news headlines or items in the feed list. I left only one screen and reduced the animations. Basically I was thinking - how would this be implemented if I were to write it ten years ago, but with modern technologies. And you know what, ES6 is great even without frameworks. CSS is great even without preprocessors. Sometimes simpler tools give better results.

So the Headline was rewritten, it takes nearly 4KB of code and loads instantly even on my phone with slow network.

Future

As everyone is talking about progressive web applications, I decided to turn Headline into a PWA. I was not hard, with Lighthouse and tons of documentation. Now I can read my last cached news even when I’m offline. The future is now.

However, Webkit and Apple would now erase all the local storage if the app has not been used for some time. Which means user will lose not only the cached news, but also the precious list of feeds. This was unacceptable, but I found a quick workaround - I serialize a list of feeds into the URL. So if you bookmark a Headline page - you keep all your associated feeds always with you. Moreover, you can create feeds on the desktop, convert the URL into a QR code, open it on the mobile and that’s how you can sync two devices together without any need for a backend.

The other futuristic problem was how to reduce the number of posts in the headline feed, because the amount was sometimes overwhelming. I decided to try natural language processing approaches to filter news by user interests. The first problem here would be to figure out what user finds interesting. The links he clicked? But I often read the headlines and don’t click, because the news is clear to me, still, I find it interesting.

But even if we get a list of the headlines interesting from the user’s perspective, how do we find related news? I tried every approach I was aware of, from TD-IDF and “Bag of Words” to word2vec. I used common word2vec model and I trained my own one based on the HN and Reddit titles to make it domain-specific. But in every case the best I was getting was basically filtering by keywords. Synonyms were very close from reality. Imagine the related words for Apple, Swift, Go, Sketch - they all highly depend on the context and the context is almost impossible to extract from a headline of ten words. I still don’t give up a hope to make it work some day, but for now I gave up.

Instead I implemented a literal keyword filtering. One can give a list of words and regexps to highlight - and Headline will highlight the matching titles for you. Simple, fast and predictable.

So I’m back to using RSS daily. Is it a pleasant experience? Rather yes, than no. But to me RSS looks more undead, than dead. For those who remember RSS from its past glory it may seem alive forever. For those who don’t - probably social networks and AI would feel more natural.

Would you like to give Headline a try? You may have a look at zserge.com/headline. But if you found an issue or would like to customize it - the sources are on Github, pull requests are always welcome!

Technologies don’t go extinct, and RSS is definitely not dead. Thanks for reading, and may you only get good news!

I hope you’ve enjoyed this article. You can follow – and contribute to – on Github, Mastodon, Twitter or subscribe via rss.

Mar 30, 2020

See also: Let's make the worst React ever! and more.